Building a cool chatbot

Concepts

Natural Language Processing (NLP) is a field of Artificial Intelligence that enables computers to analyze and understand the human language.

Natural Language Understanding (NLU) is a subset of a bigger picture of NLP, just like machine learning, deep learning, NLP, and data mining are a subset of a bigger picture of Artificial Intelligence (AI), which is an umbrella term for any computer program that does something smart.

spaCy is an open-source software library for advanced NLP, written in Python and Cython. It provides intuitive APIs to access its methods trained by deep learning models.

Cornerstones

Before we actually dive into spaCy and code snippets, make sure we have the necessary setup ready.

1 | mkdir -p ~/chatBot && cd ~/chatBot |

spaCy models are just like any other machine learning or deep learning models. A model is a yield of an algorithm or, say, an object that is created after training data using a machine learning algorithm. spaCy has lots of such models that can be placed directly in our program by downloading it just like any other Python package.

1 | python -m spacy download zh_core_web_lg |

POS Tagging

Part-of-speech (POS) tagging is a process where you read some text and assign parts of speech to each word or token, such noun, verb, adjective, etc.

POS tagging becomes extremely important when you want to identify some entity in a given sentence. The first step is to do POS tagging and see what our text contains.

Let’s get our hands dirty with some of the examples of real POS tagging.

1 | import spacy |

Stemming and Lemmatization

Stemming is the process of reducing inflected words to their word stem, base form.

A stemming algorithm reduces the words “saying” to the root word “say”, whereas “presumable” becomes “presum”. As you can see, this may or may not always be 100% correct.

Lemmatization is closely related to stemming, but lemmatization is the algorithmic process of determining the lemma of a word based on its intended meaning.

spaCy doesn’t have any in-built stemmer, as lemmatization is considered more correct and productive. (spaCy 没有内置的词干提取器,因为词形还原被认为更加准确和有效。)

Difference between Stemming and lemmatization:

- Stemming does the job in a crude, heuristic way that chops off the ends of words, assuming that the remaining word is what we are actually looking for, but it often includes the removal of derivational affixes.

- Lemmatization tries to do the job more elegantly with the use of a vocabulary and morphological analysis of words. It tries its best to remove inflectional endings only and return the dictionary form of a word, konwn as the lemma.

1 | from nltk.stem.porter import PorterStemmer |

1 | import spacy |

Since you are pretty much aware what a stemming or lemmatization does in NLP, you should be able to understand that whenever you come across a situation where you need the root form of the word, you need to do lemmatization there. For example, it is often used in building search engines. You must have wondered how Google gives you the articles in search results that you meant to get even when the search text was not properly formulated.

This is where one makes use of lemmatization.

Imageine you search with the text, “When will the next season of Game of Thrones be releasing?”

Now, suppose the search engine does simple document word frequency matching to give you search results. In this case, the aforementioned query probably won’t match an article with a caption “Game of Thrones next season release date”.

If we do the lemmatization of the orginal question before going to matchh the input with the documents, then we may get better results.

Named-Entity Recognition

NER, also known by other names like entity identification or entity extraction, is a process of finding and classifying named entities existing in the given text into pre-defined categories.

The NER task is hugely dependent on the knowledge base used to train the NE extraction algorithm, so it may or may not work depending upon the provided dataset it was trained on.

spaCy comes with a very fast entiry recognition model that is capable of identifying entity phrases from a given document. Entities can be of different types, such as person, location, organization, dates, numerals, etc. These entities can be accessed through .ents property of the doc object.

1 | import spacy |

Stop Words

Stop words are high-frequency words like a, an, the, to and also that we sometimes want to filter out of a document before further processing. Stop words usually have little lexical content and do not hold much of meaning.

1 | from spacy.lang.zh.stop_words import STOP_WORDS |

Tosee if a word is a stop word or not, you can use the nlp object of spaCy. We can use the nlp object’s is_stop attribute.

1 | nlp.vocab["的"].is_stop |

Dependency Parsing

Dependency parsing is one of the more beautiful and powerful features of spaCy that is fast and accurate. The parser can also be used for sentence boundary detection and lets you iterate over base noun phrases, or “chunks”.

This feature of spaCy gives you a parsed tree that explains the parent-child relationship between the words or phrases and is indenpendent of the order in which words occur.

1 | import spacy |

Ancestors are the rightmost token of this token’s syntactic descendants.

To check if a doc object item is an ancestor of another doc object item programmactically, we can do the following:

doc[3].is_ancestor(doc[5])

The above returns True because doc[3] (i.e., flight) is an ancestor of doc[5] (i.e., Bangalore).

Children are immediate syntactic dependents of the token. We can see the children of a word by using children attribute just like we used ancestors.

list(doc[3].children) will output [a, from, to]

Dependency parsing is one the most important parts when building chatbots from scratch. It becomes far more important when youn want to figure out the meaning of a text input from your user to your chatbot. There can be cases when you haven’t trained your chatbots, but still you don’t want to lose your customer or reply like a dumb machine.

In these cases, dependency parsing really helps to find the relation and explain a bit more about what the user may be asking for.

If we were to list things for which dependency parsing helps, some might be:

- It helps in finding relationships between words of grammatically correct sentences.

- It can be used for sentence boundary detection.

- It is quite useful to find out if the user is talking about more than one context simulationeously.

You need to write your own custom NLP to understand the context of the user or your chatbot and, based on that, identify the possible grammatical mistakes a user can meke.

All in all, you must b ready for such scenarious where a user will input garbage values or grammatically incorrect sentences. You can’t handle all such scenarios at once, but you can keep improve you chatboot by adding custom NLP code or by limiting user input by design.

Noun Chunks

Noun chunks or NP-chunking are basically “base noun phrases”. We can say they are flat phrases that have anoun as their head. You can think of noun chunks as a noun with the words describing the noun.

1 | import spacy |

The ‘noun_chunks’ syntax iterator is not implemented for language ‘zh’

Finding Similarity

Finding similarity between two words is a use-case you will find most of the time working with NLP. Sometimes it becomes fairly important to find if two words are similar. While building chatbots you will often come to situations where you don’t have to just find similar-lokking words but also how closely related two words are logically.

spaCy uses high-quality word vectors to find similarity between two words using GloVe algorithm (Global Vectors for Word Representation).

GloVe is an unsupervised learning algorithm for obtaining vector representations for words. GloVe algorithm uses aggregated global word-word co-occurrence statistics from a corpus to train the model.

1 | import spacy |

Seeing this output, it doesn’t make much sense and meaning. From an application’s perspective, what matters the most is how similar the vectors of different words are – that is, the word’s meaning itself.

In order to find similarity between two words in spaCy, we can do the following.

1 | import spacy |

The word ‘car’ is more related and similar to the word ‘truck’ than the word ‘plane’.

We can also get the similarity between sentences.

1 | import spacy |

As we can see in this example, the similarity between both of the sentences is about 82%, which is good enough to say that both of the sentences are quite similar, which is true. This can help us save a lot of time for writing custom code when build chatbots.

Tokenization

Tokenization is one of the simple yet basic concepts of NLP where we split a text into meaningful segments. spaCy first tokenizes the text (i.e., segments it into words and then punctuation and other things). A question might come to your mind: Why can’t I just use the built-in split method of Python language and do the tokenization? Python’s split method is just a raw method to split the sentence into tokens given a sepatator. It doesn’t take any meaning into account, whereas tokenization tries to preserve the meaning as well.

1 | import spacy |

If you are not satisfied with spaCy’s tokenization, you can use its add_special_case method to add your own rules before relying completely on spaCy’s tokenization method.

Regular Expressions

You must already know about regular expressions and their usage.

Text analysis and processing is a big subject in itself. Sometimes words play together in a way that makes it extremely difficult for machines to understand and get trained upon.

Regular expression can come handy for some fallback for a machine learning model. It has the power of pattern-matching, which can ensure that the data we are processing is correct or incorrect. Most of the early chatbots were hugely dependent on pattern-matching.

Given the power of machine learning these days, regular expression and pattern-matching has taken a back step, but make sure you brush up a bit about it as it may be needed at any time to parse specific details from words, sentences, or text documents.

The Hard Way

“Building Chatbots the Hard Way” is not to hard to learn. It’s the hard way of building chatbots to have full control over your own chatbots. If you want to build everything yourself, then you take the hard route. The harder route is hard when you go through it but beautiful and clear when you look back.

It is a rough road that leads to the heights of etness. – Lucius Annaeus Seneca

1 | pip install rasa==3.4.0 |

构建Rasa机器人的步骤

- 初始化项目

- 准备NLU训练数据

- 准备故事story

- 定义领域domain

- 定义规则rule

- 定义动作action

- 配置config

- 训练模型

- 测试机器人

- 发布机器人

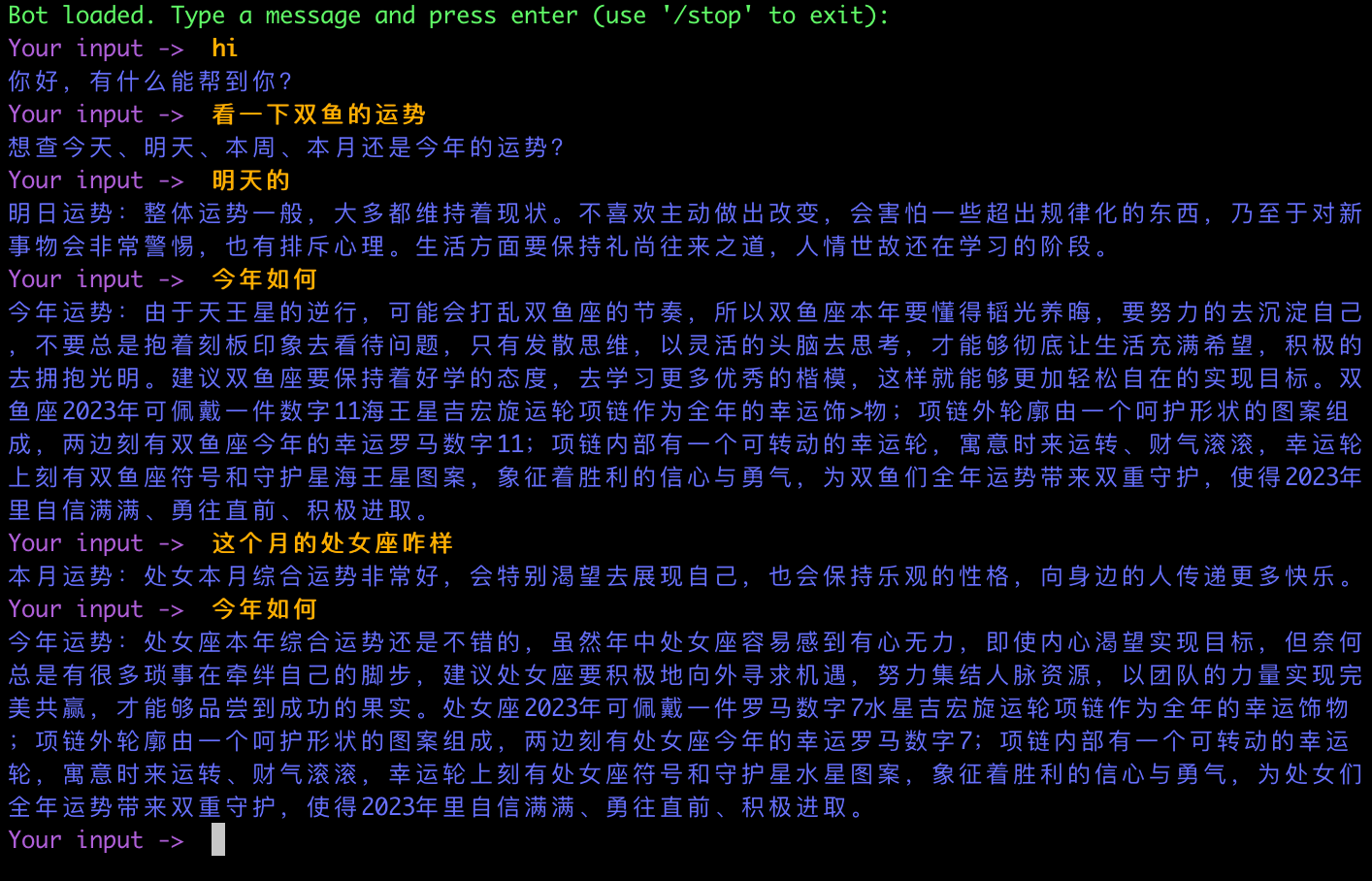

Building a Simple Horoscope Bot

Let’s decide the scope of this chatbot and see what it does and can do.

- The Horoscope Bot should be able to understand greetings and reply with a greeting.

- The bot should be able to understand if there user is asking for horoscope.

- The bot should be able to ask the horoscope sign of the user if the user doesn’t provide it.

- The bot should learn from existing responses to formulate a new response.

It is pretty simple what our bot is supposed to do here.

Possible intents

- Greeting Intent: User starting with a greeting

- Get Horoscope Intent: User asking for horoscope

- User’s Horoscope Intent: User telling the horoscope sign

We’ll try to build the bot that does the basic task of giving a horoscope.

Let’s create a possible conversation script between our chatbot and the user.

User: Hello

Bot: 你好,有什么能帮到你?

User: 看一下今年的运势

Bot: 想查哪个星座的运势?

User: 双鱼的

Bot: 由于天王星的逆行,可能会打乱双鱼座的节奏,所以双鱼座本年要懂得韬光养晦,要努力的去沉淀自己...

This conversation is just to have a fair idea of how our chatbot conversation is going to look.

We can have our chatbot model itself trained to prepare a valid response instead of writing a bunch of if ... else statements.

Initializing the bot

Let’s init our bot.

1 | cd ~/chatBot |

Preparing data

First, prepare the nlu data. (nlu负责意图提取和实体提取)

The following is what my nlu.yml under data folder looks like:

1 | version: "3.1" |

Then, prepare the stories data (Rasa是通过学习story的方式来学习对话管理知识).

The following is what my stories.yml under data folder looks like:

1 | version: "3.1" |

Then, prepare the domain data (domain定义了chatbot需要知道的所有信息,包括intent, entity, slot, action, form, response).

The following is what my domain.yml under project root looks like:

1 | version: "3.1" |

Then, prepare the rule (rule负责将问题分类映射到对应的动作上).

The following is what my rules.yml under data folder looks like:

1 | version: "3.1" |

Then, prepare the action (action接收用户输入和对话状态信息,按照业务逻辑进行处理,并输出改变对话状态的事件和回复用户的消息).

The following is what my actions.py under actions folder looks like:

1 | class ActionGetHoroscope(Action): |

Then, prepare the config.

The following is what my config.yml under project root looks like:

1 | recipe: default.v1 |

Training

1 | rasa train |

Test

1 | rasa test |

Ok, if everything goes well, we’ve got a simple chatbot out there.

Run

Run an action server.

1 | rasa run actions --actions actions.actions |

Run a shell

1 | rasa shell |

Let’s play with it in that shell.

Try it out for yourself.

References

- Blog Link: https://neo1989.net/HandMades/HANDMADE-build-a-cool-chatbot-step-by-step/

- Copyright Declaration: 转载请声明出处。